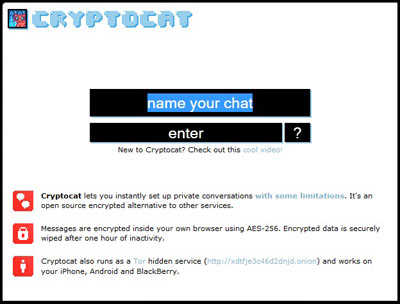

Alhamdulillah (praise be to God)! Finally, a technologist had designed a security tool that everyone could use. A Lebanese-born, Montreal-based computer scientist, college student, and activist named Nadim Kobeissi had developed an Internet cryptography tool, Cryptocat, that seemed as easy to use as Facebook Chat but was presumably far more secure.

Encrypted communications are hardly a new idea. Technologists wary of government surveillance have been designing free encryption software since the early 1990s. Of course, no tool is completely safe, and much depends on the capabilities of the eavesdropper. But for years digital safety tools have been so hard to use that few human rights defenders and even fewer journalists (my best guess is one in 100) employ them.

Activist technologists often complain that journalists and human rights defenders are too lazy or too foolish to use digital safety tools consistently when they are operating in hostile environments. Journalists and many human rights activists, for their part, complain that digital safety tools are too difficult or time-consuming to operate, and that, even if one tries to learn them, they often don't work as expected.

Cryptocat promised to finally bridge these two distinct cultures. Kobeissi was profiled in The New York Times, and Forbes and especially Wired praised the tool. But Cryptocat's sheen faded fast. Within three months of winning a prize associated with The Wall Street Journal, Cryptocat ended up like a cat caught in a storm: wet, dirty, and a little worse for wear. Analyst Christopher Soghoian, who wrote a Times op-ed last fall arguing that journalists must learn digital safety skills to protect their sources, blogged that Cryptocat had far too many structural flaws for safe use in a repressive environment.

An expert writing in Wired agreed. Responding to another Wired piece published just weeks earlier, Patrick Ball said the prior author's admiration of Cryptocat was "inaccurate, misleading and potentially dangerous." Ball is one of the developers of Martus at Benetech, a Silicon Valley-based nonprofit. Martus is an encrypted database that groups use to secure information such as witness testimony of human rights abuses.

But unlike Martus, which encrypts its data locally on the user's machine, the original version of Cryptocat was a host-based security application: it relied on a server to deliver the code that encrypted and decrypted each chat. That made Cryptocat potentially vulnerable to attack without the end user knowing, since a compromised server could deliver altered code.

So we are back to where we started, to a degree. Other, older digital safety tools are “a little harder to use, but their security is real,” Ball added in Wired. Yet, in the real world, from Mexico to Ethiopia, from Syria to Bahrain, how many human rights activists, journalists, and others actually use them? “The tools are just too hard to learn. They take too long to learn. And no one’s going to learn them,” a journalist for a major U.S. news organization recently told me.

Who will help bridge the gap? Information-freedom technologists clearly don’t build free, open-source tools to get rich. They’re motivated by the recognition one gets from building an exciting, important new tool. (Kind of like journalists breaking a story.) Training people in the use of security tools or making those tools easier to use doesn’t bring the same sort of credit.

Or financial support. Donors, in good part U.S. government agencies, tend to back the development of new tools rather than ongoing usability training and development. But in doing so, technologists and donors are avoiding a crucial question: Why aren't more people using security tools? These days, 20 years into what we now know as the Internet, usability testing is key to every successful commercial online venture. Yet it is rarely practiced in the Internet freedom community.

That may be changing. The anti-censorship circumvention tool Tor has grown progressively easier to use, and donors and technologists are now working to make it easier and faster still. Other tools, like Pretty Good Privacy or its slightly improved free alternative GnuPG, still seem needlessly difficult to operate. Partly because the emphasis is on open technology built by volunteers, users who make a mistake or reach a dead end are rarely, if ever, shown how to get back on track. This would be nearly inconceivable today in any commercial application designed to help users purchase a service or product.

Which brings us back to Cryptocat, the ever-so-easy tool that was not as secure as it was once thought to be. For a time, the online debate among technologists degenerated into the kind of vitriol one might expect from, say, rival U.S. presidential campaigns. But wounds have since healed, and some critics are now working with Kobeissi to help clean up and secure Cryptocat.

Life and death, prison and torture remain real outcomes for many users, and, as Ball noted in Wired, there are no security shortcuts in hostile environments. But if tools remain too difficult for people to use in real-life circumstances in which they are under duress, then that is a security problem in itself.

The lesson of Cryptocat is that more learning and collaboration are needed. Donors, journalists, and technologists can work together more closely to bridge the gap between invention and use.

Updated: We updated this entry in the sixth paragraph to clarify how the original version of Cryptocat worked.